A/B Test Results

Learn how to analyze your A/B Test results to conclude your experiments.

The A/B Test Results feature provides insights from your experiments to support data-driven decisions. It offers comprehensive analysis, intuitive visualization, statistical significance assessment, and reporting broken down by variant. These help you uncover insights that lead to product enhancements, improved user engagement, and a successful A/B test.

It uses advanced filtering to ensure accurate data assessment. It includes only data captured during the user's participation in the test and excludes data from instances where the user enters and leaves the A/B test multiple times (unless the test is marked as 'Sticky'). This ensures that the metric data on the Results page is clean and based on user behavior.

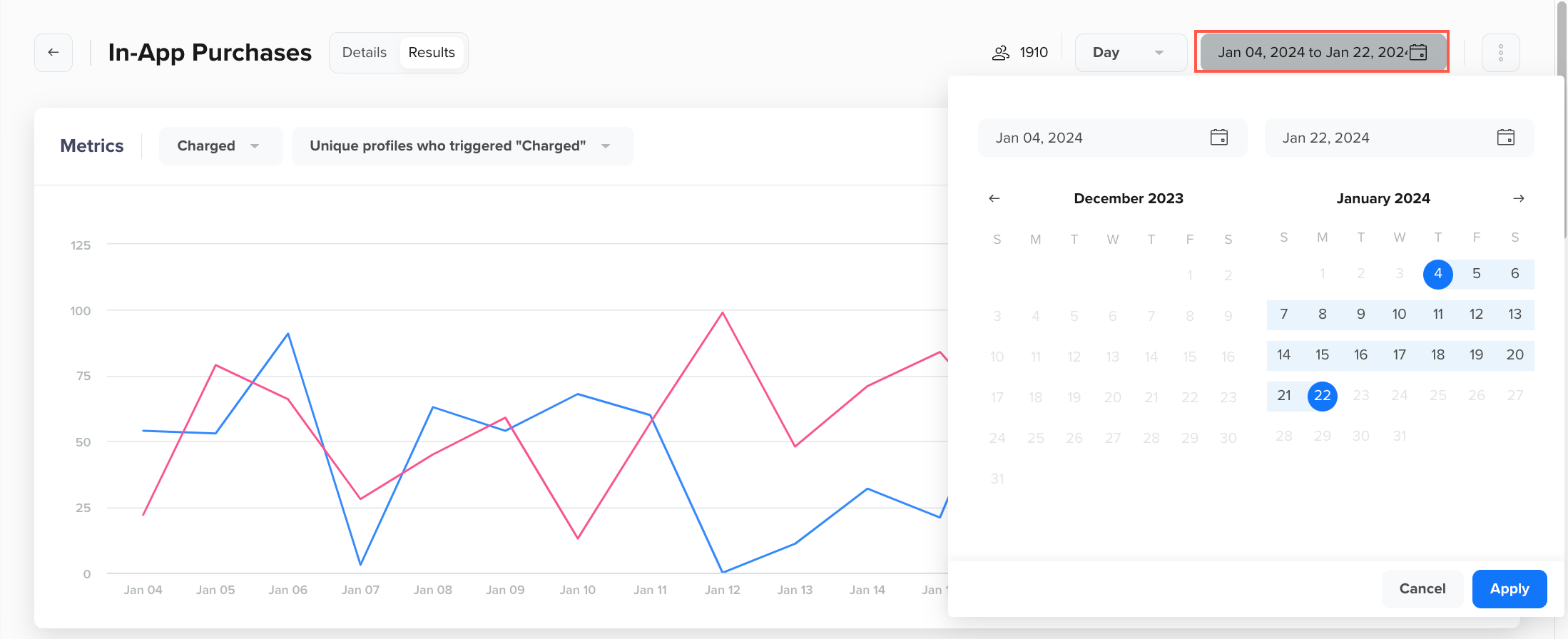

You can view the A/B Test Results for a particular period by selecting the time range from the Calendar widget in the top right corner. This helps segment and analyze data based on when events or observations occurred for the defined timeframe. You can view results for a short interval, such as a day, a week, or a month, or a longer span, such as a year or multiple years. You define the timeframe to analyze your A/B Test as follows:

Define the Time Frame using the Calendar Widget

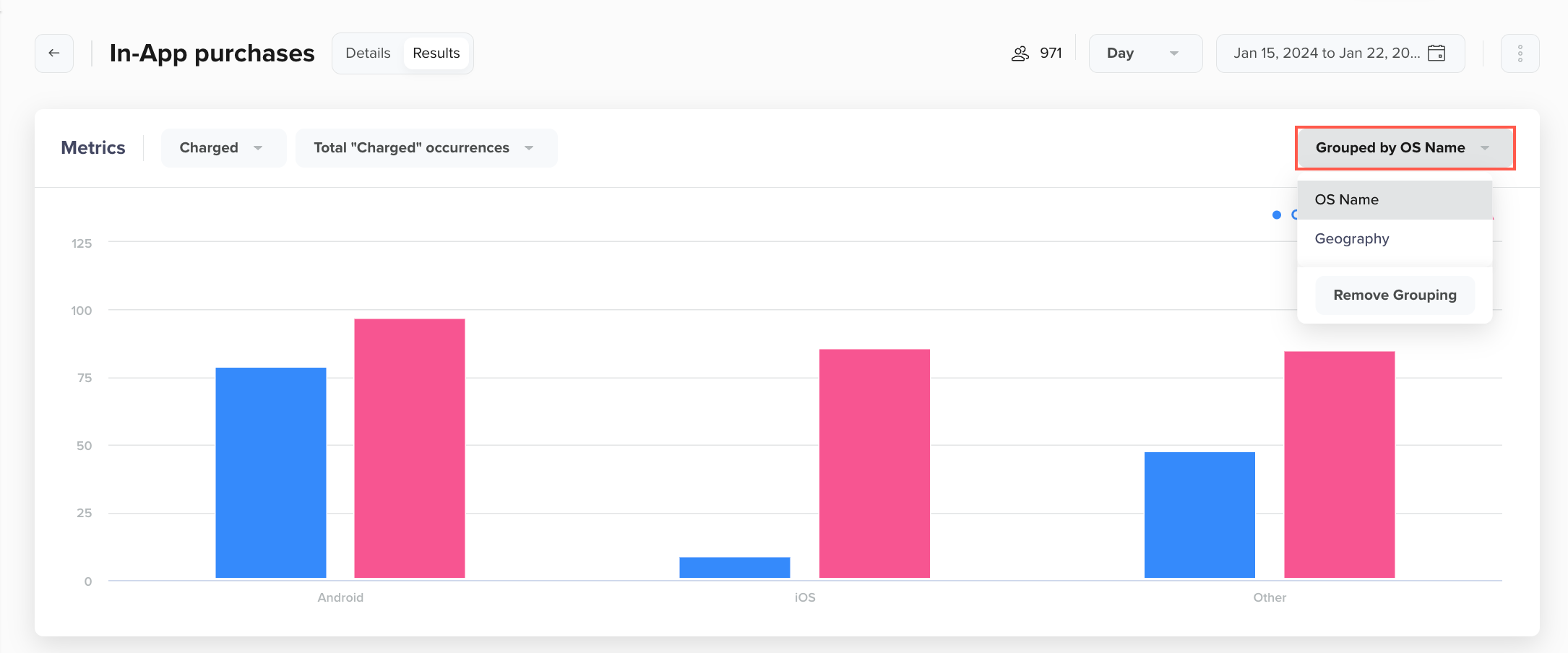

You can further categorize your data based on platform attributes (such as Operating System) and User Properties using the Group by option (refer to the following image):

Group by OS Name

This helps in getting a granular view of users, allowing you to make data-driven decisions across distinct operating systems or users with different properties.

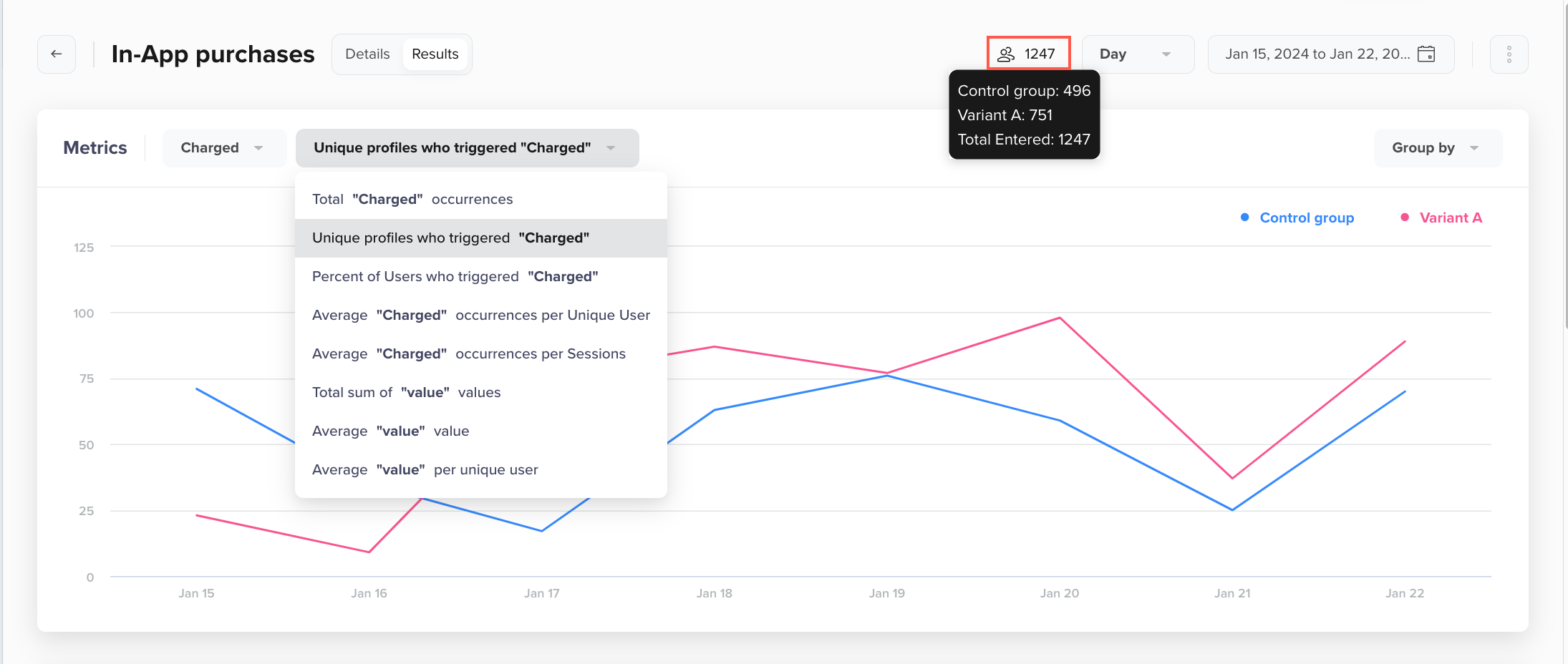

Also, hovering over the Users icon adjacent to the Results tab offers a high-level breakdown of actual users entering the A/B test across different Variants and the Control group (see the following image).

Total users entering across the variants

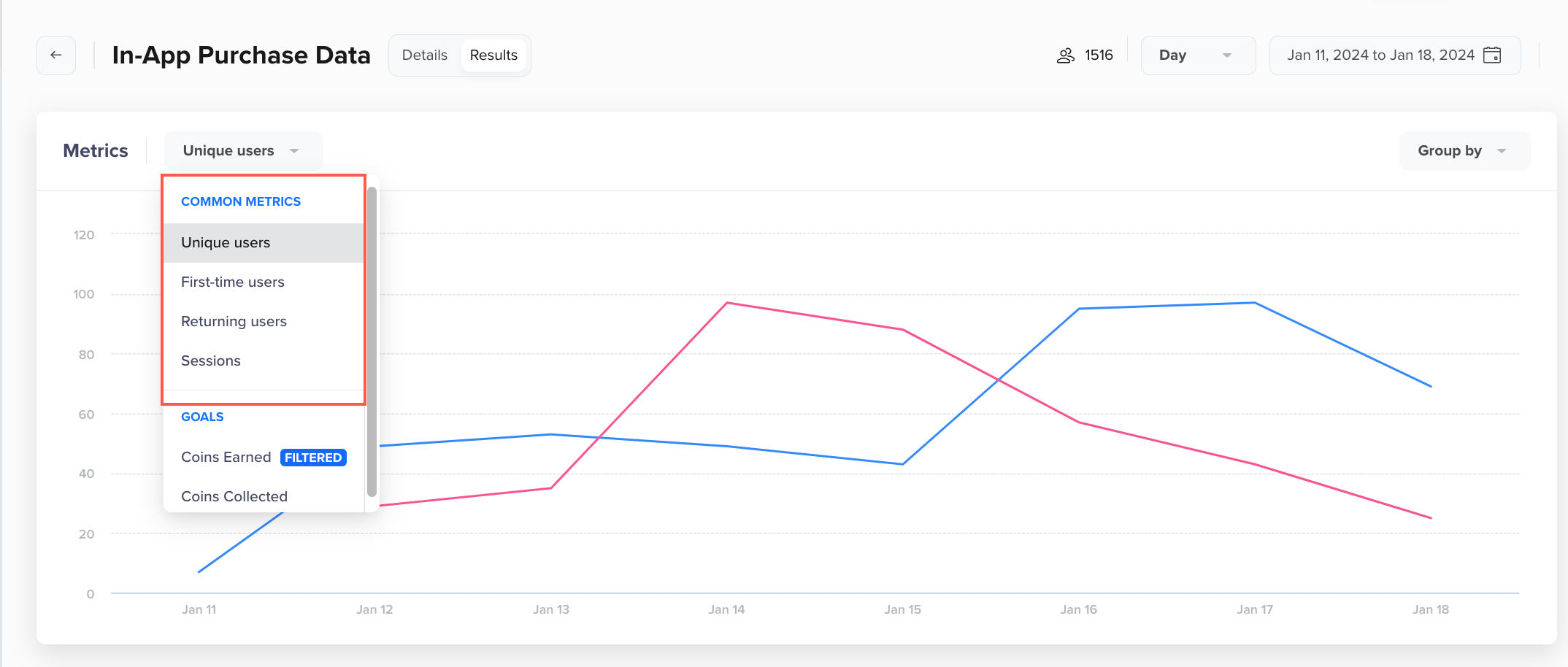

The available metrics in the A/B test fall into two categories: Common metrics and Goal metrics.

Common Metrics

Common metrics are accessible for every A/B test, regardless of whether you have chosen specific Event-based Goals. The common metrics include:

- Unique Users: This refers to the count of individual users who have interacted with a website, application, or service during a specific period. Each user is counted only once, regardless of how often they visit or use the platform within that timeframe.

- Returning Users: This refers to the individuals who have visited or interacted with a website, application, or service more than once during a specific period. They are the subset of Unique Users who have engaged with the platform on multiple occasions.

- First-time Users: This refers to the individuals who visit or interact with a website, application, or service for the first time during a specific period. These users are new to the platform and have not engaged with it before within the defined timeframe.

- Sessions: This refers to the individual visits or interactions a user has with a website, application, or service during a particular timeframe. A session starts when a user accesses the app and ends after a period of inactivity or when the user leaves the app.

Tracking sessions is a good way to measure engagement for certain apps, such as video or music streaming and news apps. To track user sessions, you must enable session tracking from the CleverTap dashboard: navigate to Settings > Session analytics and toggle session tracking ON.

Common Metrics View

Listed below are some key session metrics, along with their formulas and definitions:

| Metric | Formula | Definition |
|---|---|---|
| Returning Users | 100 x [(Total Unique Users) - (Total First-time Users)] / (Total Unique Users) | The percentage of users returning to your application or website. |
| Total Sessions | Total occurrences of the "Session Concluded" event | The total number of user sessions. |
| Total Session Length | Sum of values for the event property length of the Session Concluded event | The total length of all sessions, calculated using the event property length of the Session Concluded event. |
| Average Session Length | (Total Session Length) / (Total Sessions) | The average length of a session. |
| Average Session Length per User | (Total Session Length) / (Total Unique Users) | The average length of a user session in your application/website. |
| Average Sessions per User | (Total Sessions) / (Total Unique Users) | The average number of sessions for a user. |
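The session formulas above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not CleverTap's implementation: the function name `session_metrics`, its parameters, and the idea of passing in a plain list of session lengths are all assumptions made for the example.

```python
def session_metrics(unique_users, first_time_users, session_lengths):
    """Compute the session metrics from the table above.

    Hypothetical inputs (not a CleverTap API):
      unique_users      -- Total Unique Users in the selected period
      first_time_users  -- Total First-time Users in the selected period
      session_lengths   -- one length value per "Session Concluded" event
    """
    def ratio(numerator, denominator):
        # Avoid division by zero when a variant has no users or sessions yet.
        return numerator / denominator if denominator else 0.0

    total_sessions = len(session_lengths)
    total_length = sum(session_lengths)
    return {
        "returning_users_pct": 100 * ratio(unique_users - first_time_users, unique_users),
        "total_sessions": total_sessions,
        "total_session_length": total_length,
        "avg_session_length": ratio(total_length, total_sessions),
        "avg_session_length_per_user": ratio(total_length, unique_users),
        "avg_sessions_per_user": ratio(total_sessions, unique_users),
    }
```

For example, `session_metrics(200, 50, [60, 120, 180, 240])` describes a period with 200 unique users, 50 of them first-time, and four concluded sessions.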

Goal Metrics

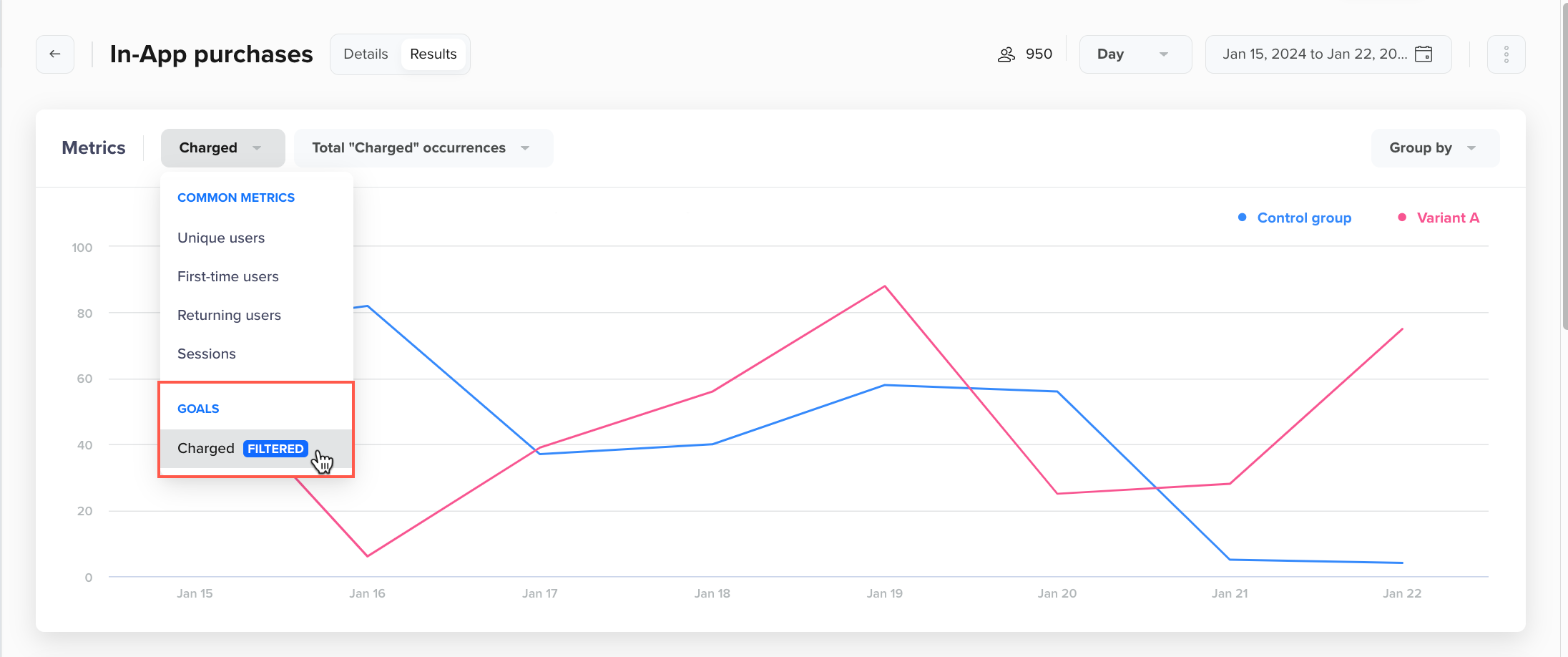

Goal metrics are specific to the event and properties selected in the A/B test Goals. You can find these from the Metrics dropdown on the left.

Goal Metrics

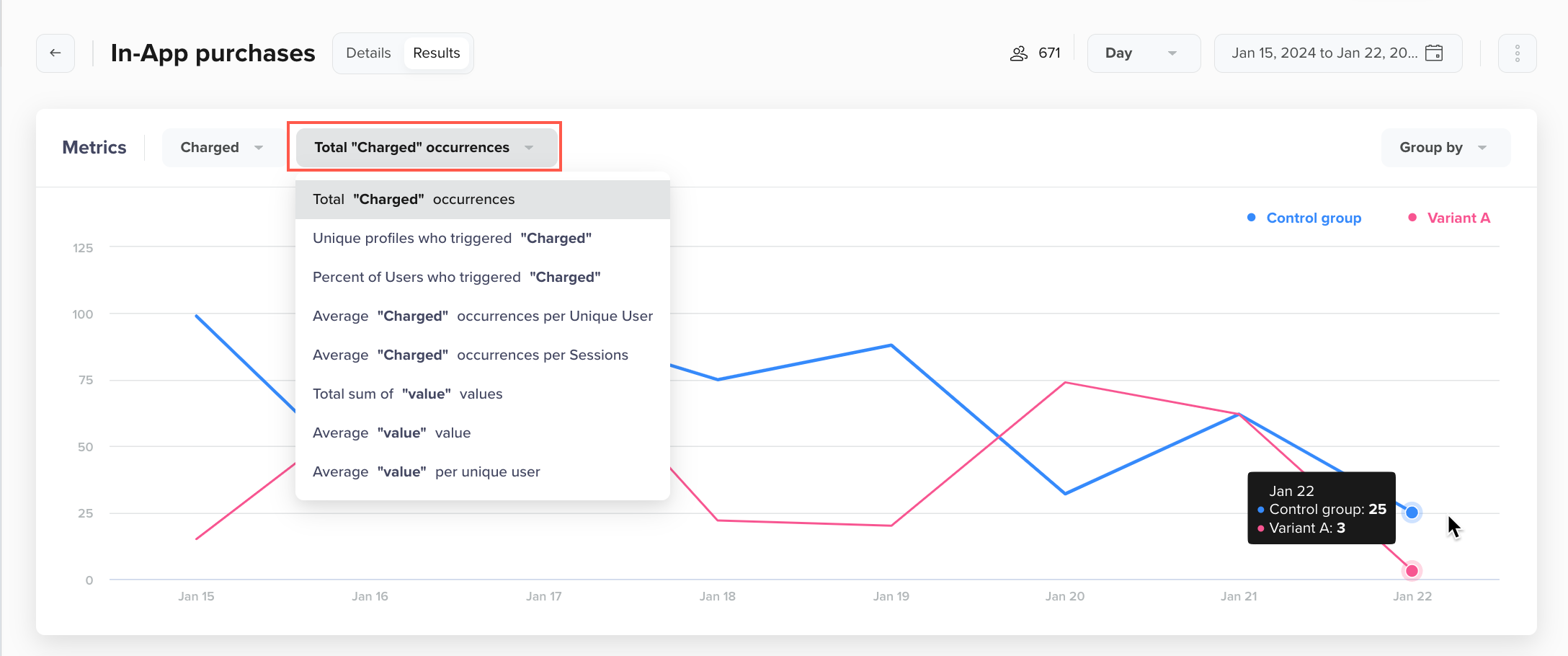

Listed below are some key goal metrics, along with their formulas and definitions:

| Metric | Formula | Definition |
|---|---|---|
| Total <Goal event> occurrences | Total occurrences of <Goal event> | The total number of times the goal event was triggered. |
| Unique profile who triggered <Goal event> | Number of Users who triggered <Goal event> | Total number of users who triggered the goal event. |
| Percent of Users who triggered <Goal event> | Percent of users who triggered the <Goal event> = | The percentage of users in your app that triggered the goal event. |
| Average occurrences of <Goal event> per Unique User | (Total occurrences of <Goal event> / Total Unique Users) | The average number of times a user triggers this event. |
| Average occurrences of <Goal event> per Session | (Total occurrences of <Goal event> / Total Sessions) | The average number of times the goal event is triggered in a session. |
| Total sum of <Event Property> values | (Total sum of <Event Property> values) | Total sum of all aggregate event property values associated with the goal event. |
| Average of <Event Property> values | (Total sum of <Event Property> values / Total Event occurrences) | The average value of each occurrence of this event. |
| Average of <Event Property> values per unique user | (Total sum of <Event Property> values / Unique Users) | The average of <Event Property> values of the goal event per user. |
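As a rough illustration of how the goal metric formulas combine, the following Python sketch computes them from a small in-memory data set. The function name `goal_metrics` and the input shape (a dictionary mapping each user ID to the list of property values from that user's goal events) are hypothetical and chosen only for the example. The sketch also assumes that "Unique Users" in the last formula means all unique users in the test, and it leaves out the percent metric because its formula is not spelled out in the table.

```python
def goal_metrics(values_by_user, total_unique_users, total_sessions):
    """Compute goal metrics for one variant.

    Hypothetical inputs (not a CleverTap API):
      values_by_user      -- {user_id: [property value per goal event occurrence]}
      total_unique_users  -- Total Unique Users in the variant
      total_sessions      -- Total Sessions in the variant
    """
    def ratio(numerator, denominator):
        # Avoid division by zero for empty variants.
        return numerator / denominator if denominator else 0.0

    total_occurrences = sum(len(values) for values in values_by_user.values())
    # Only users with at least one occurrence count as having triggered the goal.
    triggering_users = sum(1 for values in values_by_user.values() if values)
    total_value = sum(sum(values) for values in values_by_user.values())
    return {
        "total_occurrences": total_occurrences,
        "unique_profiles_triggered": triggering_users,
        "avg_occurrences_per_unique_user": ratio(total_occurrences, total_unique_users),
        "avg_occurrences_per_session": ratio(total_occurrences, total_sessions),
        "total_property_sum": total_value,
        "avg_property_value": ratio(total_value, total_occurrences),
        # Assumption: the denominator is all unique users, not only triggering users.
        "avg_property_value_per_unique_user": ratio(total_value, total_unique_users),
    }
```

For a Charged goal, a call such as `goal_metrics({"u1": [10.0, 20.0], "u2": [30.0]}, total_unique_users=4, total_sessions=6)` would represent three purchases made by two of the four users in the variant.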

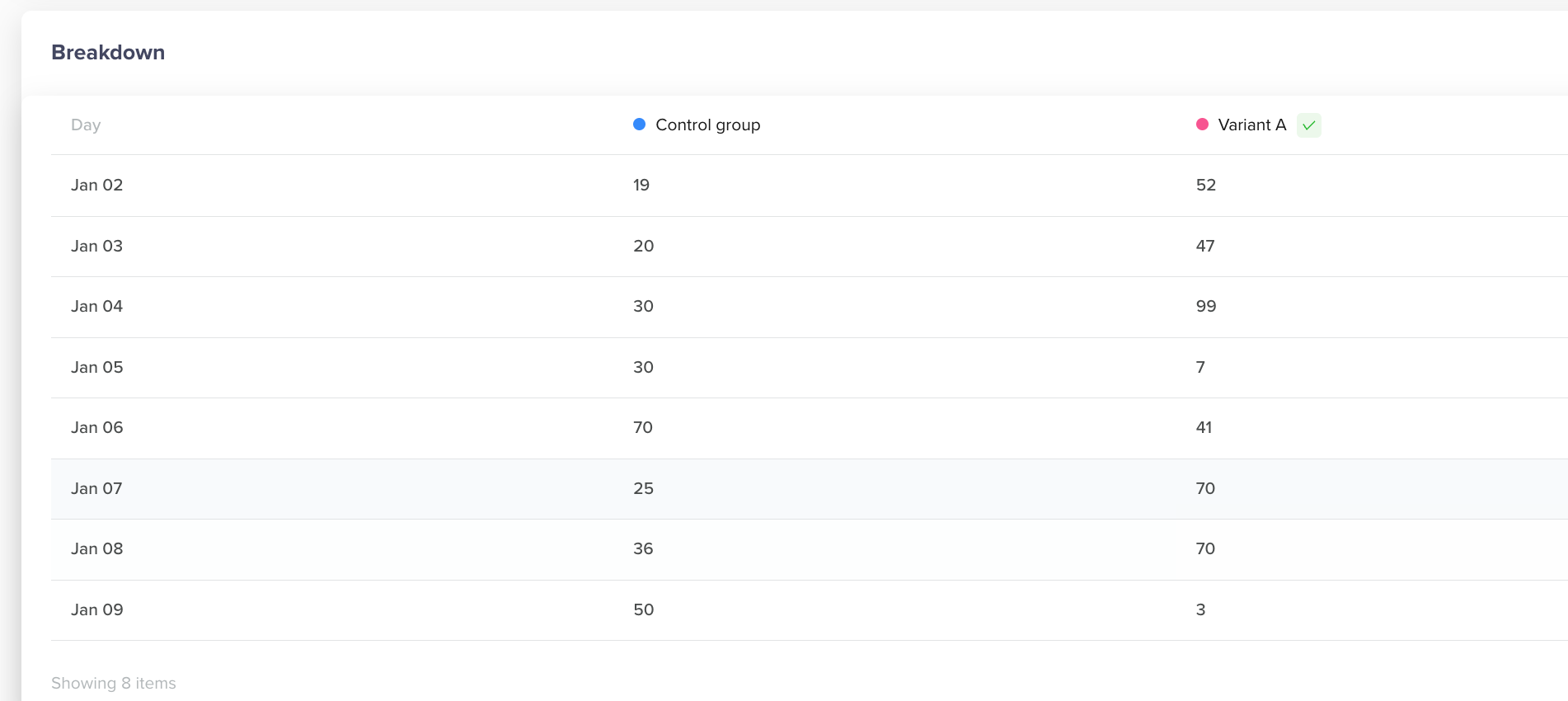

After you select the event metric of your choice from the dropdown, you can view its data in graphical and tabular format. Consider a sample test where an e-commerce business wants to analyze its sales. In this case, you add the Charged event as a Goal with an aggregate property value. The following image shows the set of available metrics for the Charged event:

Graphical Data View of Charged Event

Tabular data view for the Charged Event

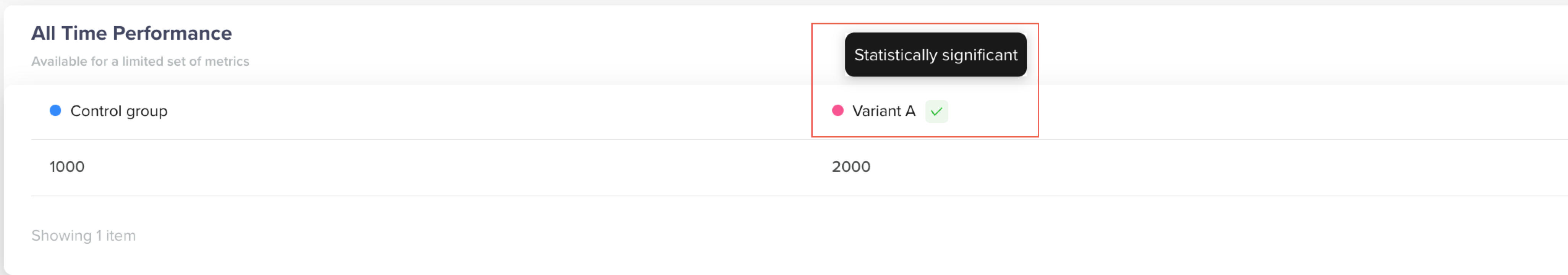

All Time Performance

For a limited set of metrics (Percent of users who triggered the <Event>), you can see the All Time Performance section.

This feature calculates data throughout the entire duration of the A/B Test, irrespective of the time frame selected at the top of the page. Based on all the data, each variant is analyzed to identify if it is statistically significant, and an indicator is displayed next to the variants that helps:

- Validate the reliability of the results.

- Assess whether observed differences between the control and variant groups are real rather than attributable to random chance.

- Provide confidence in the decision-making process.

Note: CleverTap uses a two-tailed Welch's t-test with a default confidence level of 95% to identify the statistically significant variant.
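To make the significance check more concrete, here is a minimal, standard-library-only sketch of a two-tailed Welch's t-test in Python. It is not CleverTap's implementation. The function names are hypothetical, and the example assumes each user is represented as 1 (triggered the goal event) or 0 (did not), which is one possible way to model the "Percent of users who triggered the event" metric. The p-value is obtained by numerically integrating the Student's t density with Simpson's rule, so it is an approximation.

```python
import math
from statistics import mean, variance


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=2000):
    """Approximate two-tailed p-value using Simpson's rule on [0, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # probability mass between 0 and |t|
    return max(0.0, 1.0 - 2.0 * central_area)


def welch_t_test(control, variant):
    """Return (t, df, p) for two independent samples with unequal variances."""
    n_c, n_v = len(control), len(variant)
    se2_c = variance(control) / n_c  # squared standard error of each mean
    se2_v = variance(variant) / n_v
    t = (mean(control) - mean(variant)) / math.sqrt(se2_c + se2_v)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_c + se2_v) ** 2 / (se2_c ** 2 / (n_c - 1) + se2_v ** 2 / (n_v - 1))
    return t, df, two_tailed_p(t, df)


def is_significant(control, variant, confidence=0.95):
    """True when the two-tailed p-value falls below 1 - confidence."""
    _, _, p = welch_t_test(control, variant)
    return p < 1.0 - confidence
```

As a usage example, a control group where 100 of 1,000 users triggered the goal could be passed as `[1] * 100 + [0] * 900`, and a variant where 150 of 1,000 did as `[1] * 150 + [0] * 850`. The sketch requires at least two users per group and some variation in the data, since it divides by the combined standard error.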

All Time Performance Section for a Limited Set of Metrics
